Rent GPU infrastructure

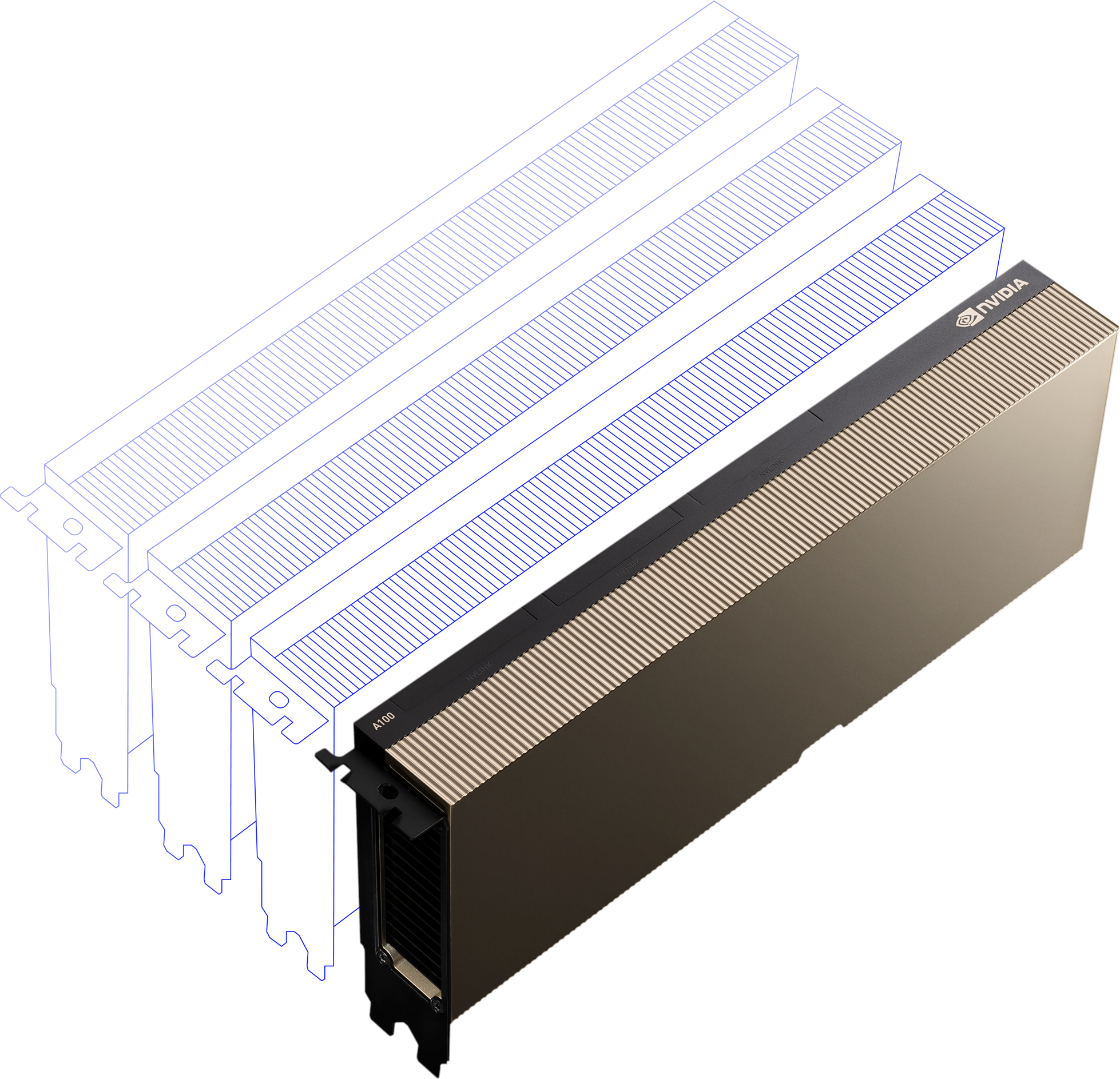

- Deploy dedicated NVIDIA A100 GPUs for AI workloads
- On-premises deployment or a private cloud solution
- Long-term GPU commitments for predictable pricing
- Lower-cost NVIDIA A100 GPUs
- Located in the EU
- Templates with popular machine learning tools

Save with committed usage

Commit to consistent usage of GPU-hours and get reserved GPUs at a discounted price.

| Commitment | Price per hour | Configuration |
|---|---|---|
| 6 months | $1.12 | 1x NVIDIA A100 40GB, 24 vCPU, 128GB memory |
| 1 year | $0.99 | 1x NVIDIA A100 40GB, 24 vCPU, 128GB memory |
| 2 years | $0.90 | 1x NVIDIA A100 40GB, 24 vCPU, 128GB memory |
| 3 years | $0.82 | 1x NVIDIA A100 40GB, 24 vCPU, 128GB memory |
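To compare the commitment tiers, a rough monthly estimate can help. The minimal Python sketch below uses the hourly rates from the tiers above and assumes one GPU running around the clock (8760 / 12 = 730 hours per month) billed simply as hourly rate × hours. Actual billing terms, minimum usage, and fees for extras such as dedicated NVMe disks are not modeled, and the function names are hypothetical.

```python
# Hourly rates for 1x NVIDIA A100 40GB (24 vCPU, 128GB memory), per commitment term.
RATES_USD_PER_HOUR = {
    "6 months": 1.12,
    "1 year": 0.99,
    "2 years": 0.90,
    "3 years": 0.82,
}

# Assumption: continuous usage, 8760 hours per year / 12 months = 730 hours per month.
HOURS_PER_MONTH = 730


def monthly_cost(rate: float, gpus: int = 1, hours: float = HOURS_PER_MONTH) -> float:
    """Hypothetical estimate: hourly rate x number of GPUs x hours used, in USD."""
    return round(rate * gpus * hours, 2)


def savings_vs_baseline(rate: float, baseline: float = RATES_USD_PER_HOUR["6 months"]) -> float:
    """Percentage saved relative to the 6-month rate."""
    return round((baseline - rate) / baseline * 100, 1)


if __name__ == "__main__":
    for term, rate in RATES_USD_PER_HOUR.items():
        print(f"{term:>8}: ${monthly_cost(rate):,.2f}/month, "
              f"{savings_vs_baseline(rate)}% below the 6-month rate")
```

Under these assumptions, the 3-year rate works out to roughly 27% less per GPU-hour than the 6-month rate.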

Looking for More Performance?

Get dedicated NVMe disks on demand for an additional fee, or rent bare-metal servers for maximum control and performance.

Running on professional server platforms

*AMD, the AMD Arrow logo, AMD EPYC, and combinations thereof are trademarks of Advanced Micro Devices, Inc.

AMD EPYC™ CPU

With AMD EPYC processors, you can allocate up to 196 vCPUs and 10 GPUs in a single instance.

Flexible NVMe®-based data storage

Fast, reliable data storage for your datasets and trained models, extensible at runtime up to 8 TB.

Fast ECC RAM

ECC memory at 2.9 GHz.

Private AI Infrastructure Without the Complexity

Deploy High-Performance AI Workloads Without the Overhead

Turnkey AI Clusters

Custom-built solutions tailored to your business needs.

Kubernetes out of the box

Enterprise-grade Kubernetes environments optimized for AI workloads.

Flexible data storage

Fast, runtime-extensible data storage for your datasets and trained models.